So, Tesla had its AI Day last week and, as usually happens with anything related to Elon, there has been major hype and reaction around the robot that was presented. The motive of the event is to attract the best talent to work for Tesla and to convince engineers and developers that the company is getting closer to achieving its visionary goals.

In this piece, I will aim to break down the event in terms of new business developments for Tesla and share my thoughts on it.

There are 3 major components that Tesla is currently working on, which are complementary to each other, and we'll take a closer look at each one of them.

FSD (Full Self Driving) - What goes into designing an FSD car?

Dojo Super Computer - Why does Tesla need a supercomputer?

Humanoid Robot - What will be the use case and challenges?

FSD (Full Self Driving)

There are 2 elements to making autonomous driving successful. One of them happens at the car level (real time) and the other happens at data centers (say, Tesla HQ).

There are 2 things that need to happen at the car level → the car needs to see its surroundings (Data Collection) and also be able to infer what to do (Data Processing).

For data collection, companies use different tools like cameras, sensors, radar and lidar. Tesla relies only on camera streams for data collection and has removed radar from its new models. The company believes radar and lidar increase costs with no extra visibility benefit.

The 2nd part is the FSD unit. Tesla designed its own SoC (System on Chip) from scratch so that it is fully optimized for autonomous driving, which also helps in writing software that fully utilizes the potential of this unit. This is essentially the brain of the car. The unit carries two processing units, which are there to create redundancy in decisions. Each of these chips receives the information from the cameras and analyzes it independently to decide on a plan. If both plans agree with each other, the car acts on them. The whole SoC consists of CPUs, GPUs and NPUs. Each NPU (Neural Processing Unit) has 32 MB of SRAM, which stores temporary results and helps reduce the overall power consumption of the SoC. The unit can perform 144 TOPS (trillion operations per second) at 72 W.
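
To make the redundancy idea concrete, here is a minimal sketch of an agreement check between two independently computed plans. The `Plan` format, the tolerance values and the "return nothing on disagreement" behaviour are all my own assumptions for illustration, not Tesla's actual logic. The last line only works out the efficiency implied by the 144 TOPS and 72 W figures.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Plan:
    """Hypothetical plan format: a steering angle and a target acceleration."""
    steering_deg: float
    accel_mps2: float


def plans_agree(a: Plan, b: Plan, steer_tol: float = 0.5, accel_tol: float = 0.1) -> bool:
    """Two plans agree if both commands match within a tolerance (assumed values)."""
    return (abs(a.steering_deg - b.steering_deg) <= steer_tol
            and abs(a.accel_mps2 - b.accel_mps2) <= accel_tol)


def select_action(plan_a: Plan, plan_b: Plan) -> Optional[Plan]:
    """Return a plan to execute only when both units agree, otherwise None."""
    return plan_a if plans_agree(plan_a, plan_b) else None


# Efficiency implied by the quoted figures: 144 TOPS at 72 W.
TOPS_PER_WATT = 144 / 72  # 2.0
```

What a real system does when the two units disagree is not covered in the presentation, so the `None` result here is just a placeholder for "do not act on this plan".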

Camera streams go through neural networks that run on the car and produce all the outputs needed to form the world model around the car, and the planning software drives the car based on that. Broadly, there are two kinds of data the neural networks have to predict: the occupancy, and the lanes and objects.

A multi-camera video neural network predicts the full physical occupancy of the world around the car, which includes elements like trees, walls, buildings, cars, etc. It predicts them along with their future motion.
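
As a rough mental model of "occupancy plus future motion", the sketch below uses a tiny 2D grid where each cell carries an occupancy probability and a predicted velocity. The real network works on 3D space from video; the `Cell` type, the 2D simplification and the 0.5 threshold are assumptions I made to keep the example short.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    occupied_prob: float          # probability that this cell is occupied
    velocity: tuple = (0, 0)      # predicted motion in cells per time step


def predict_next(grid: dict, threshold: float = 0.5) -> set:
    """Return the cells expected to be occupied one time step ahead."""
    future = set()
    for (x, y), cell in grid.items():
        if cell.occupied_prob >= threshold:
            vx, vy = cell.velocity
            future.add((x + vx, y + vy))
    return future


grid = {
    (0, 0): Cell(0.9),                    # static object, e.g. a wall
    (3, 1): Cell(0.8, velocity=(1, 0)),   # moving object, e.g. a car
    (5, 5): Cell(0.1),                    # most likely free space
}
next_occupied = predict_next(grid)        # {(0, 0), (4, 1)}
```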

On top of this base level of geometry, there are semantic layers needed to navigate the roadways. To figure out the different kinds of lanes and how they are connected, techniques from language modelling are used. To achieve this, the raw video streams that come into the networks go through a lot of processing, and the networks then output the full kinematic state (positions, velocities, accelerations) with minimal post-processing.

Once the neural network has processed the information, the planner needs to decide what to do. Timing is an important factor here, and planning in real time around many objects, each with multiple possible future actions, is a difficult task. Tesla uses a framework called interaction search, which is basically a parallelized search over a bunch of different trajectories. This reduces the time per action to the order of microseconds, compared to 1-5 ms per action with a traditional optimization approach (which doesn't scale well to a large number of interactions).
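
The general shape of "score many candidate trajectories at once and pick the best" can be sketched as below. This is not Tesla's interaction search: the cost function, the safety radius and the three hand-written candidates are assumptions, and a Python thread pool only illustrates the structure rather than delivering microsecond timings.

```python
from concurrent.futures import ThreadPoolExecutor
from math import hypot, inf
from typing import List, Tuple

Point = Tuple[float, float]


def trajectory_cost(traj: List[Point], obstacles: List[Point], goal: Point,
                    safety_radius: float = 1.0) -> float:
    """Infinite cost on collision, otherwise distance from the end point to the goal."""
    for px, py in traj:
        for ox, oy in obstacles:
            if hypot(px - ox, py - oy) < safety_radius:
                return inf
    end_x, end_y = traj[-1]
    return hypot(end_x - goal[0], end_y - goal[1])


def best_trajectory(candidates, obstacles, goal):
    """Score every candidate concurrently and return the cheapest one with its cost."""
    with ThreadPoolExecutor() as pool:
        costs = list(pool.map(lambda t: trajectory_cost(t, obstacles, goal), candidates))
    best_index = min(range(len(candidates)), key=costs.__getitem__)
    return candidates[best_index], costs[best_index]


candidates = [
    [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],   # straight ahead, too close to the obstacle
    [(0.0, 0.0), (1.0, 1.5), (2.0, 1.5)],   # swerve around it
    [(0.0, 0.0), (0.4, 0.0), (0.4, 0.0)],   # brake and stop early
]
obstacles = [(1.5, 0.0)]
goal = (2.0, 0.0)
chosen, cost = best_trajectory(candidates, obstacles, goal)
```

Here the swerving trajectory wins because the straight one is rejected as a collision and the braking one ends further from the goal.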

All of these neural networks running in the car together produce the vector space (a 3D model of the world around the car). The planning system then operates on top of it, coming up with trajectories that avoid collisions and smoothly make progress towards the destination, using a combination of model-based optimization and a neural network that helps make it really fast.

Moving to the 2nd part of the puzzle.

Let’s say a Martian comes here to drive for the 1st time. He doesn’t know whether he can park in the middle of the road, nor does he know about traffic signals, one-way routes, speed limits, footpaths or bridges. Does it make sense for him to figure all that out in real time, while driving at 100 km/hr?

No, right? The same goes for the FSD chip.

Someone, somewhere has to provide “a sense or knowledge” to the processing unit so that it is able to figure out the correct plan of action in milliseconds. That defined sense or knowledge is the ‘Algorithm’, which here means the trained neural networks.

Training those neural networks needs a lot of computing power. Tesla has expanded its training infrastructure by ~40-50% to about 14,000 GPUs across multiple training clusters in the US.

Training the networks requires labelling of objects and surroundings. Tesla's labelling process has evolved over the years. Currently, raw sensor data is run through servers, which take a few hours to distill the information into labels that train the in-car neural networks.

On top of this, they also have a simulation system that synthetically generates scenarios that are rare and hard to reproduce physically, so that the networks can be trained on them.

All the data points go through the data engine pipeline. A baseline model is first trained with some data and shipped to the cars to see where it fails. Data from the fleet is then mined for the cases where the model fails, correct labels are provided for those cases, and the data is added to the training set. This iterative process fixes the issues and is done for every task that runs in the car.
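
The loop described above (train, ship, collect failures, add them to the training set, retrain) can be sketched with a toy model. Everything here is hypothetical: the "model" is just the set of scenarios it has seen, and the scenario names are made up to show the control flow.

```python
def train(training_set):
    """Toy 'model': it handles every scenario it has seen in training."""
    return set(training_set)


def find_failures(model, fleet_scenarios):
    """Fleet scenarios that the current model does not handle."""
    return [s for s in fleet_scenarios if s not in model]


def data_engine(initial_data, fleet_scenarios, max_rounds=10):
    """Train, deploy, mine failures, add them to the training set, repeat."""
    training_set = list(initial_data)
    model = train(training_set)
    rounds = 0
    while rounds < max_rounds:
        failures = find_failures(model, fleet_scenarios)
        if not failures:
            break
        training_set.extend(failures)   # in practice these get labelled first
        model = train(training_set)
        rounds += 1
    return model, rounds


fleet = ["highway", "parked car at turn", "fog", "highway"]
model, rounds = data_engine(["highway"], fleet)   # converges after 1 round
```

A real model does not fix every failure in one retraining pass, which is why the process keeps iterating for every task in the car.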

A lot of these trained neural networks get updated in the data centers and are finally sent over the air to the FSD computer in the cars. Tesla trained 75,000 neural network models in the last year (~1 model per 8 min), which get evaluated on large clusters. Out of these, 281 models that improved the performance of the car were shipped.
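
A quick back-of-the-envelope check of those numbers, assuming a full 365-day year of round-the-clock training (my assumption). Straight division lands at about 7 minutes per model, in the same ballpark as the roughly 8 minutes quoted, and it shows how small the share of shipped models is.

```python
MODELS_TRAINED = 75_000
MODELS_SHIPPED = 281
MINUTES_PER_YEAR = 365 * 24 * 60   # 525,600

minutes_per_model = MINUTES_PER_YEAR / MODELS_TRAINED
ship_rate_percent = 100 * MODELS_SHIPPED / MODELS_TRAINED

print(f"one model every {minutes_per_model:.1f} min")         # one model every 7.0 min
print(f"{ship_rate_percent:.2f}% of trained models shipped")  # 0.37% of trained models shipped
```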

DOJO Super-Computer

The biggest roadblock in training a large set of neural networks is computing power and time. Elon stated previously that there are GPUs that could do the work, but the requirements in cost and time are astronomical. To be really efficient and to scale their model training, they have to build their own infrastructure.

Currently the workloads run on 14,000 GPUs, where 10,000 GPUs are used for training and 4,000 for auto-labelling, and videos are stored in 30 PB (petabytes) of distributed managed video cache. They can add GPUs up to a certain point, after which adding more hampers performance. What Tesla needed was its own AI accelerator, with the size and bandwidth to deliver all data efficiently down to the lowest processing unit.

In 2021, Tesla introduced the concept of its own supercomputer (more like an AI accelerator) named DOJO, which is designed to focus on training neural networks.

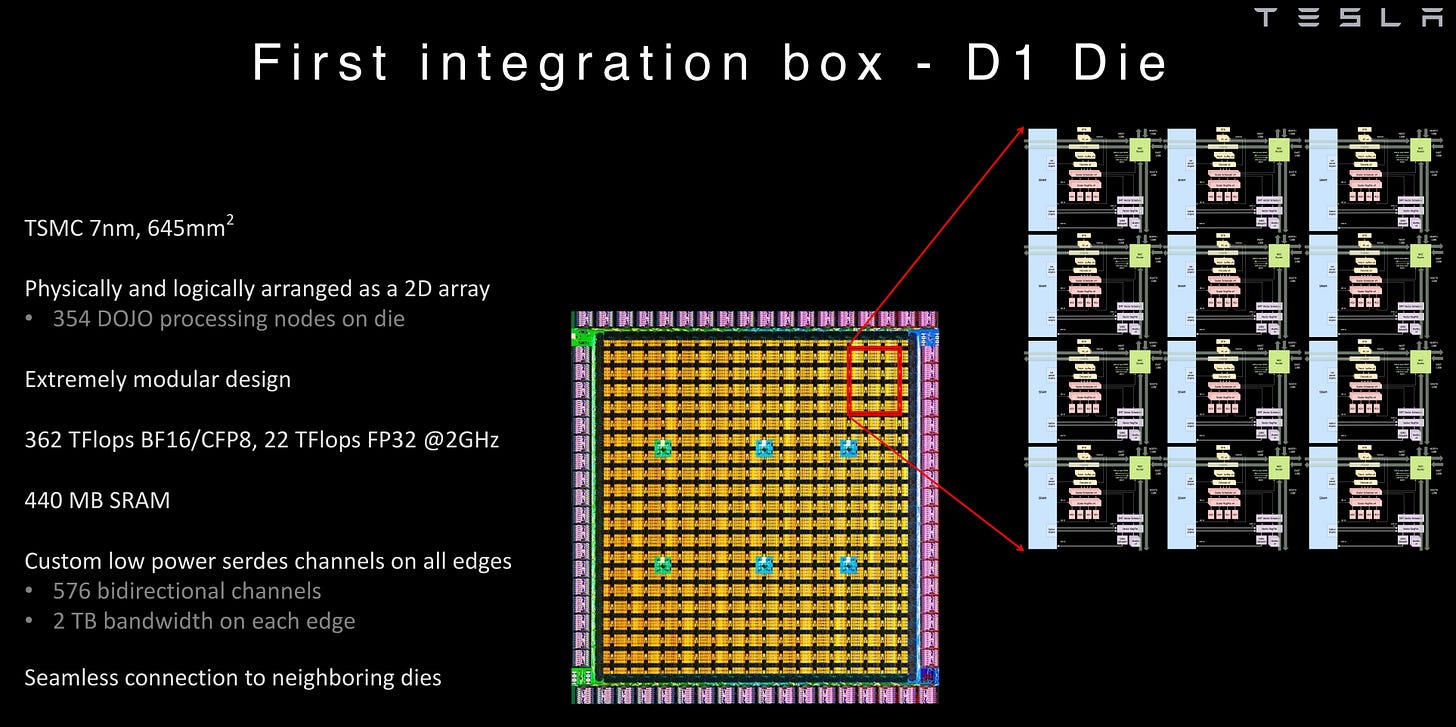

The idea was to create a chip that is specifically designed to optimize the training of neural networks. The smallest unit is a full-fledged core. 354 such cores are arranged in a 2D array on one D1 chip, which is a 645 mm² die built with TSMC's 7nm tech and has 440 MB of SRAM in total.

Tesla has a manufacturing contract with TSMC, which builds these chips and puts them through tests. The ones that pass the tests are called 'known good dies'. 25 of those known good dies are arranged in a 5 x 5 square and packaged with TSMC's integrated fan-out wafer tech (which helps preserve bandwidth) to create a Training Tile. Each of those 25 chips can talk to the others at 2 TB/sec.
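
To get a feel for the scale, here is the arithmetic using only the figures quoted above (354 cores and 440 MB of SRAM per D1, 25 D1 dies per tile). The per-core and per-tile values are derived by me, not numbers from the presentation.

```python
CORES_PER_D1 = 354
SRAM_PER_D1_MB = 440
D1_PER_TILE = 5 * 5

sram_per_core_mb = SRAM_PER_D1_MB / CORES_PER_D1      # about 1.24 MB per core
cores_per_tile = CORES_PER_D1 * D1_PER_TILE           # 8,850 cores per tile
sram_per_tile_mb = SRAM_PER_D1_MB * D1_PER_TILE       # 11,000 MB, i.e. about 11 GB

print(f"{sram_per_core_mb:.2f} MB SRAM per core")      # 1.24 MB SRAM per core
print(f"{cores_per_tile} cores per training tile")     # 8850 cores per training tile
print(f"{sram_per_tile_mb} MB SRAM per training tile") # 11000 MB SRAM per training tile
```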

Tesla was looking for the most efficient way to deliver power and went with vertical power delivery, which is not common practice. It helps minimize power loss. It’s all in one package, where heat comes out at the top, then we have the compute plane with 25 chips, the interface and the cooling system at the bottom.

Dojo, in short, aims to become a seamless compute plane with globally addressable, very fast memory, all connected together with uniform high bandwidth and low latency. This training tile has 25 D1 chips with high bandwidth between them, and it can be scaled up by simply connecting additional tiles together.

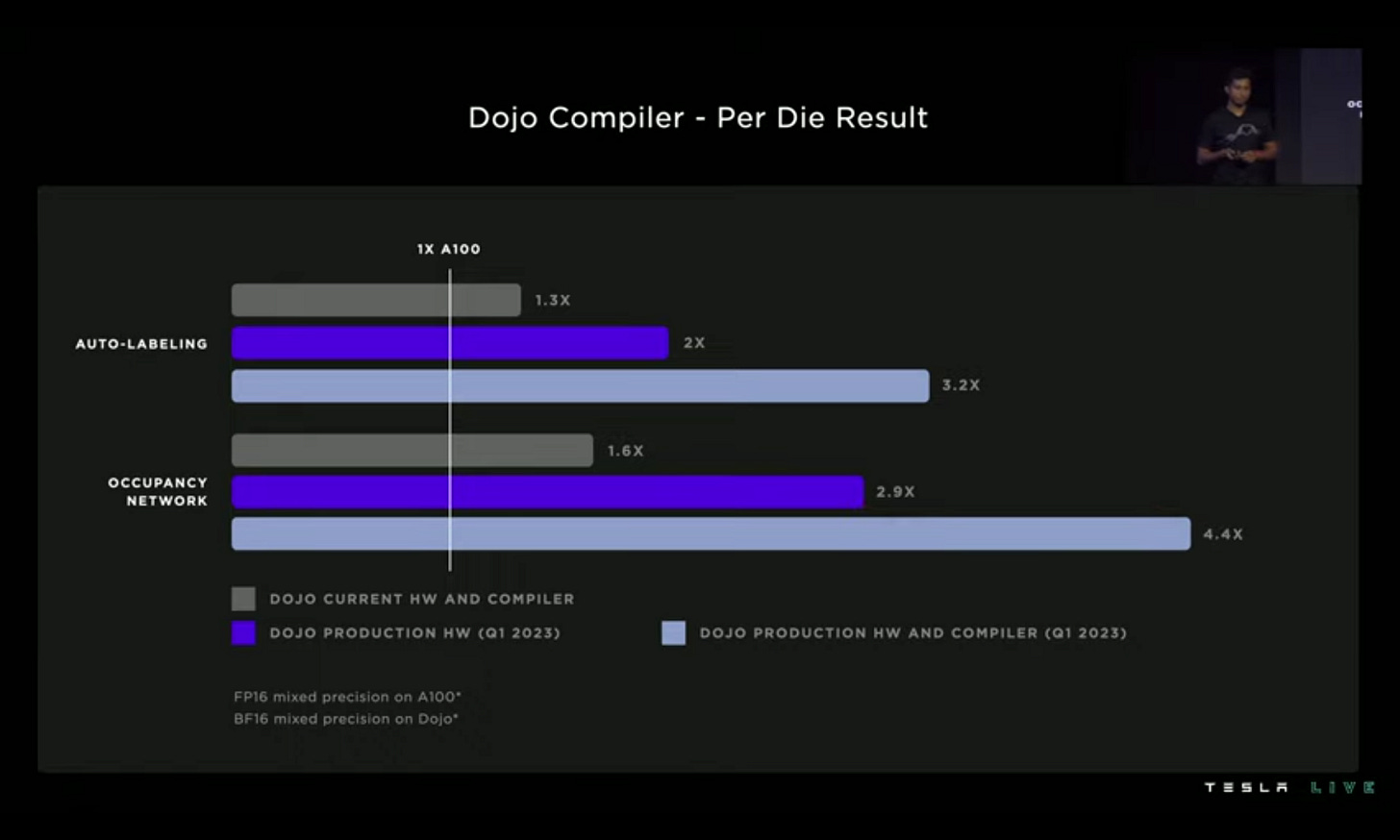

The results they’ve shown per die are incredible. The auto-labelling and occupancy networks account for ~50% of the training workload. In terms of latency, 24 GPUs give 150 μs of latency, while 25 D1 dies (DOJO) gave 5 μs.

They’ll be able to replace 6 GPU boxes with a single Dojo training tile, which will cost less than 1 GPU box. Also, time to solution will come down from months to weeks. Currently, 72 GPU racks fit into 4 DOJO cabinets. The 1st exapod is planned for Q1 2023 and will bring 2.5x the auto-labelling capacity.
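
Putting the quoted figures side by side makes the claimed gains easier to compare. The ratios below are simple divisions of the numbers above; they are my derivation, not benchmark results of their own.

```python
GPU_LATENCY_US = 150     # 24 GPUs
DOJO_LATENCY_US = 5      # 25 D1 dies
GPU_BOXES_PER_TILE = 6   # GPU boxes replaced by one training tile
GPU_SIDE_UNITS = 72      # GPU-side units that fit into the Dojo cabinets
DOJO_CABINETS = 4

latency_speedup = GPU_LATENCY_US / DOJO_LATENCY_US
units_per_cabinet = GPU_SIDE_UNITS / DOJO_CABINETS

print(f"latency: {latency_speedup:.0f}x lower on Dojo")            # latency: 30x lower on Dojo
print(f"consolidation: {units_per_cabinet:.0f} units per cabinet")  # consolidation: 18 units per cabinet
print(f"one tile replaces {GPU_BOXES_PER_TILE} GPU boxes")          # one tile replaces 6 GPU boxes
```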

Tesla Bot : Optimus

After teasing the Tesla Bot last year, this year's event started with a prototype of the bot walking on stage. Offstage, it was also able to do some tasks like moving boxes or watering plants. From what I infer, Tesla's long-term aim is to design a multi-purpose, multi-functional robot that can replace some (or most) human labor and also understand and think for itself.

To be able to replace humans, they need a bot that uses little enough power to operate for a long time, is cheap enough to make sense, has a form factor that lets it perform tasks and, most importantly, is intelligent enough to make decisions. This problem is extremely hard, and many companies are trying to find a solution for it.

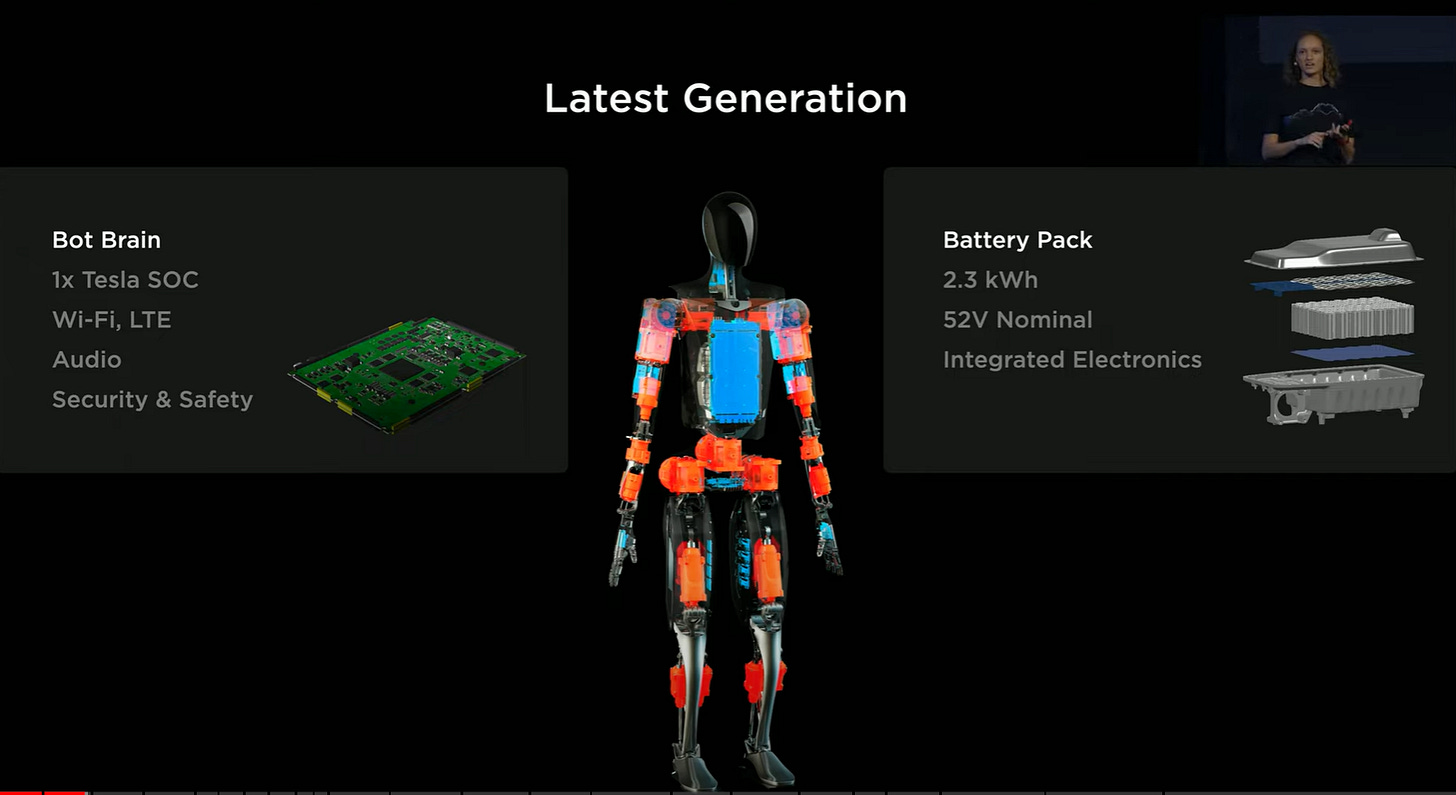

This is the latest prototype of the bot, which has 28 structural actuators. These are basically the components that enable movement (think of them as the muscles and joints in our body, which transfer a specific amount of energy to move a joint with a certain force). It will have a battery pack of 2.3 kWh at 52 V, and power consumption will be 100 W-500 W (sitting to walking), which could last for about a full day’s worth of work.
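
The "full day's worth of work" claim is easy to sanity-check from the battery and power figures. The sketch assumes constant power draw and a fully usable battery, which is an idealization on my part.

```python
BATTERY_WH = 2300        # 2.3 kWh
PACK_VOLTAGE = 52        # volts


def runtime_hours(power_w: float) -> float:
    """Hours of operation at a constant power draw, ignoring losses."""
    return BATTERY_WH / power_w


print(f"sitting (100 W): {runtime_hours(100):.1f} h")         # sitting (100 W): 23.0 h
print(f"walking (500 W): {runtime_hours(500):.1f} h")         # walking (500 W): 4.6 h
print(f"capacity: {BATTERY_WH / PACK_VOLTAGE:.1f} Ah")        # capacity: 44.2 Ah
```

So the bot gets somewhere between roughly 4.6 and 23 hours depending on the mix of sitting and walking, which is consistent with a working day if it is not walking the whole time.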

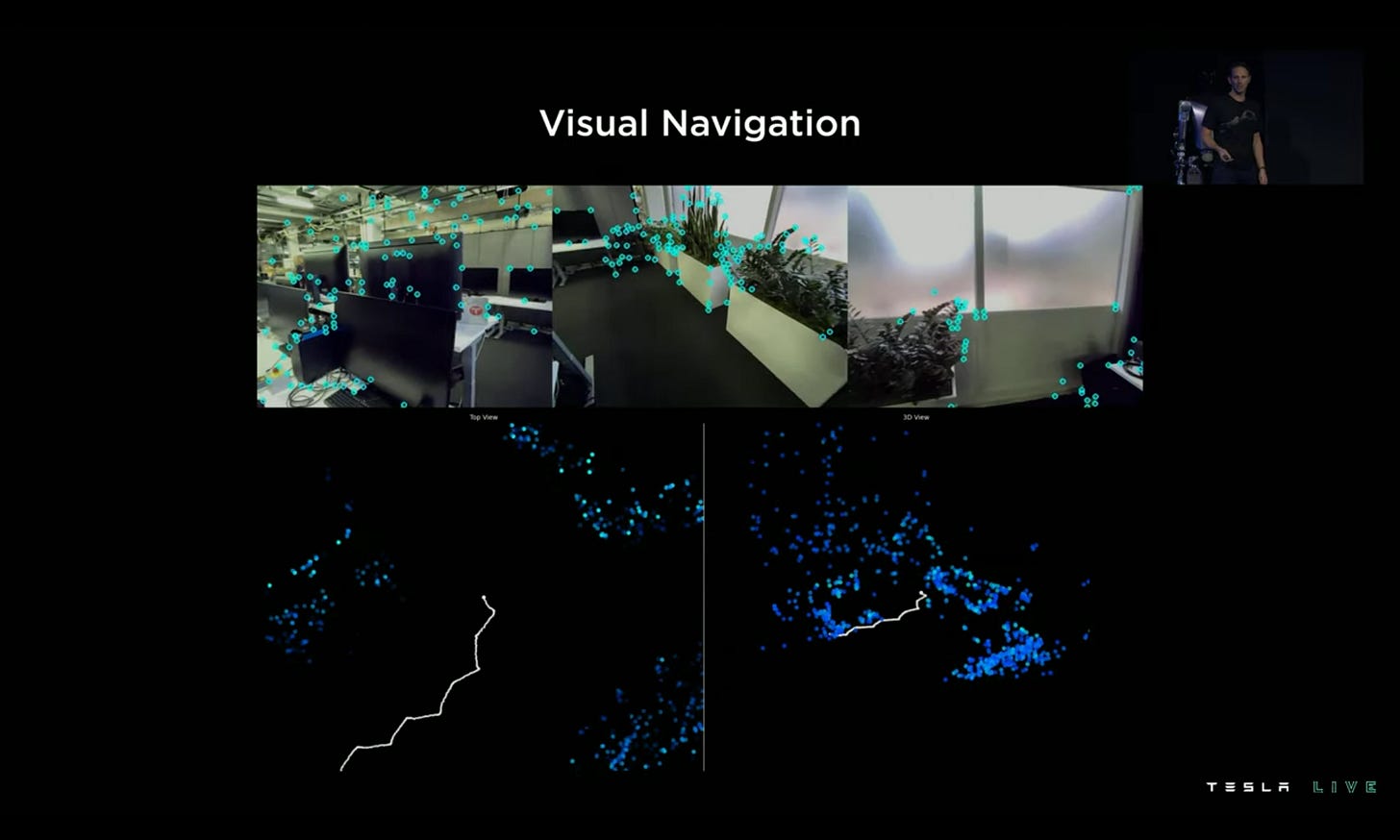

For the brain, it will use Tesla’s SoC (System on Chip), and a lot of the already existing software and data models can be reused to train the bot. For example, the same labelling model is being used for the bot's navigation.

My Thoughts

I found the Dojo supercomputer the most interesting update from the event. A lot of companies are in the race to produce fully autonomous vehicles across different geographies. Tesla sure seems to be on the right track, with aggressive strategies towards reaching its goals. It seems like the question is not “if” but only “when” someone will be able to build and scale a fully trained AV in the market.

It is also amazing to see how new optionalities are building up in the company, from the DOJO supercomputer (which could be offered as a service, like AWS, to other companies to train their models) to humanoid robots with AGI. These seem to be at a very initial stage and will probably need another 3-5 years to achieve something. Nevertheless, these are very exciting times that we’re living in, and I will keep tracking the developments to see where we end up.

Let me know in the comments: what do you make of this event, and what strategy do you think will work for AV?

Until then,

Stay curious, keep healthy!

All images are from Tesla AI Day 2021 and AI Day 2022

[Disclaimer: All information in this article is from publicly available information and personal opinions. This is not intended as, and shall not be understood or construed as, financial advice. It's very important to do your own analysis and review the facts before making any investment based on your own personal circumstances. Kindly seek professional advice before taking any investment decision.]